'Skew' in the history of computer systems

why are computers so complicated?[1] (they've gotten a lot more complicated since your typical home computer of the 1980s.[2]) would you do it differently if you were making a new computer from scratch? what would you do differently?

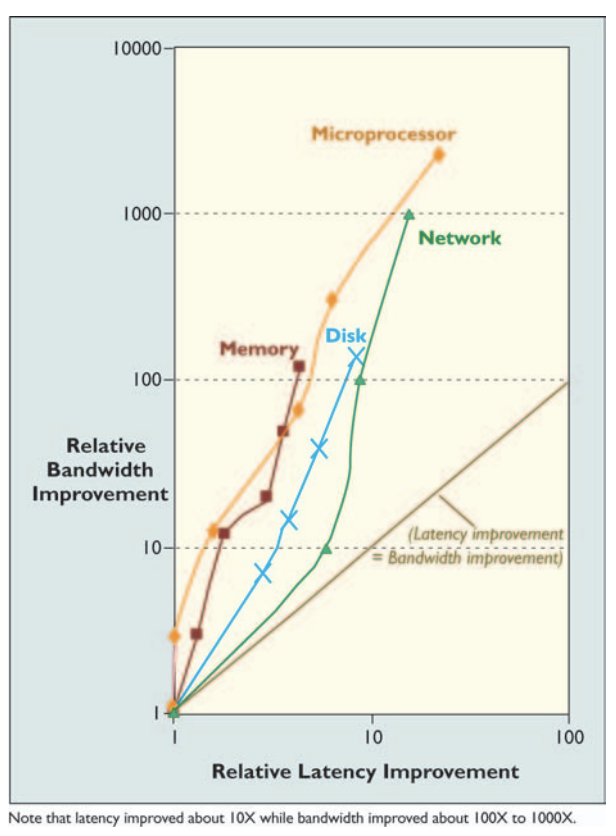

I think a lot about 'skew', where over time, one part of computers (like, say, CPUs) improves faster than another part (like, say, hard disks).[3]

mostly thinking out loud -- I want to make the case that this historical skew is part of why the computer ends up being so complicated -- why it's designed in ways that might not make sense if you were doing it from scratch with today's components.

you have a system that is fundamentally designed for a certain performance ratio that existed between components in 1980, and that system has to get horribly stretched and complexified to cope with the very different performance ratio between those components in 2021.

-

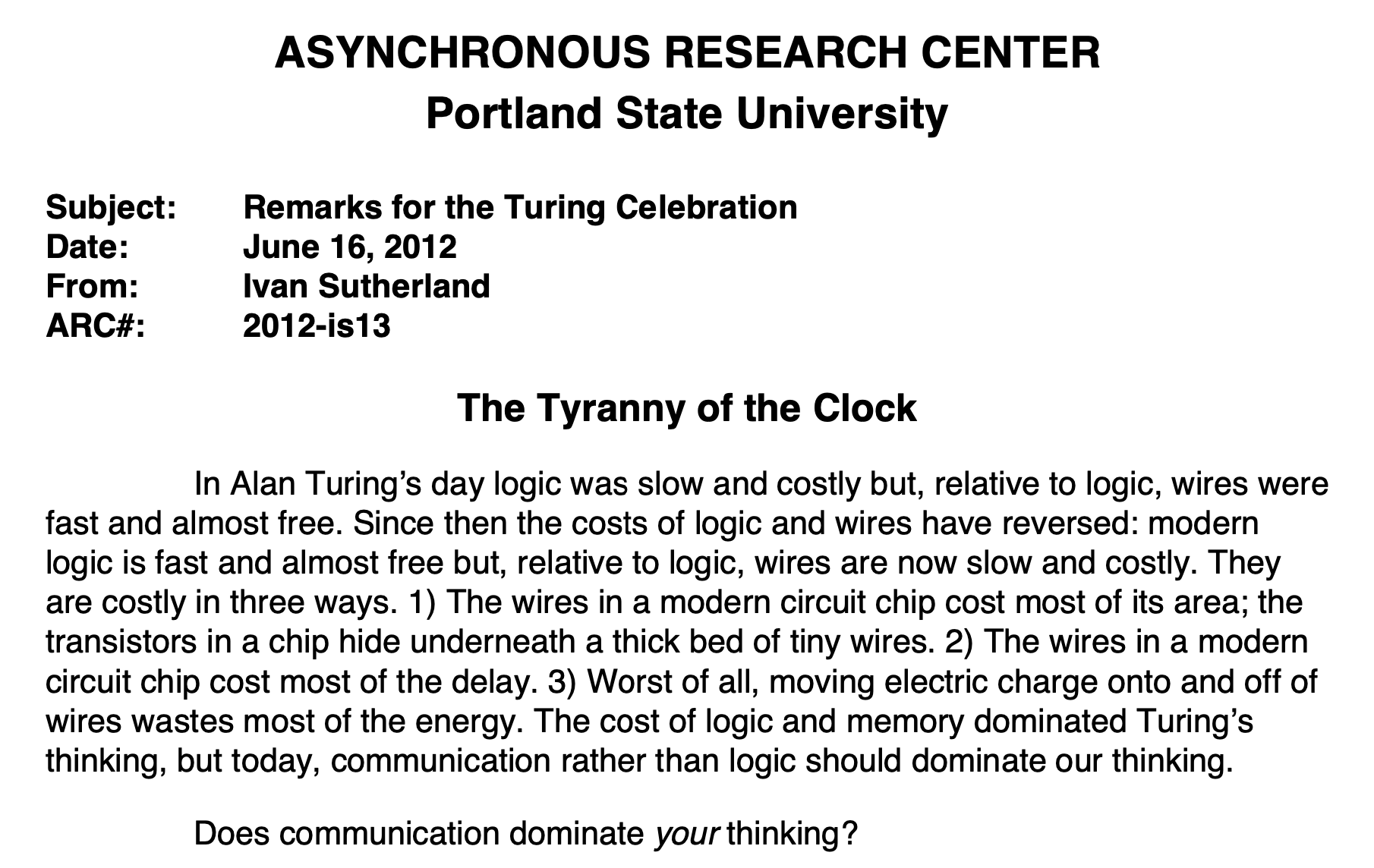

skew where in 1980, your CPU ran at comparable speed to your RAM (you could hit memory on every instruction, not much slower than doing arithmetic; you could name spots in main RAM and effectively treat those as extra CPU 'registers'), whereas in 2021, a single trip to RAM can cost your CPU hundreds of cycles:

> My first computer was a 286. On that machine, a memory access might take a few cycles. A few years back, I used a Pentium 4 system where a memory access took more than 400 cycles. Processors have sped up a lot more than memory. The solution to the problem of having relatively slow memory has been to add caching, which provides fast access to frequently used data, and prefetching, which preloads data into caches if the access pattern is predictable.

that is, they papered over the historical skew (the fact that CPUs sped up much faster than RAM did) by adding more complexity.

(so that they can provide the same interface to the programmer and to existing software while being 'magically' faster -- most of the time, and in unpredictable ways, with performance cliffs. there's something dishonest about that)
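
the caching half of the quote can be illustrated with a toy model. the sketch below is a fully associative LRU cache of 64-byte lines; the 4-cycle hit cost, the 400-cycle miss cost (borrowed loosely from the Pentium 4 figure above), the line size, and the 512-line capacity are all illustrative assumptions, and there is no prefetcher, so sequential access wins purely from spatial locality (one miss brings in 64 neighboring bytes).

```python
import random
from collections import OrderedDict

# Illustrative assumptions, not measurements:
HIT_CYCLES = 4      # cost of a cache hit
MISS_CYCLES = 400   # cost of going all the way to RAM
LINE_BYTES = 64     # bytes loaded from RAM per miss (one cache line)


class ToyCache:
    """Fully associative cache of whole lines with LRU eviction."""

    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.lines = OrderedDict()
        self.hits = 0
        self.misses = 0

    def access(self, addr):
        line = addr // LINE_BYTES
        if line in self.lines:
            self.lines.move_to_end(line)  # mark as most recently used
            self.hits += 1
        else:
            self.misses += 1
            self.lines[line] = None
            if len(self.lines) > self.num_lines:
                self.lines.popitem(last=False)  # evict least recently used

    def average_cycles(self):
        total = self.hits + self.misses
        return (self.hits * HIT_CYCLES + self.misses * MISS_CYCLES) / total


def simulate(addresses, num_lines=512):
    """Run a stream of byte addresses through a fresh cache; return avg cycles."""
    cache = ToyCache(num_lines)
    for addr in addresses:
        cache.access(addr)
    return cache.average_cycles()


N = 100_000
BUFFER_BYTES = 1 << 24  # 16 MiB, far bigger than the 32 KiB toy cache
sequential = range(N)   # bytes 0, 1, 2, ... in order
rng = random.Random(0)  # fixed seed so runs are repeatable
scattered = [rng.randrange(BUFFER_BYTES) for _ in range(N)]

print(f"sequential: {simulate(sequential):.1f} cycles per access")
print(f"random:     {simulate(scattered):.1f} cycles per access")
```

under these assumptions the sequential walk averages about 10 cycles per access (one miss per 64 bytes), while the random walk pays close to the full 400-cycle miss on nearly every access: the same interface, wildly different speed.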

-

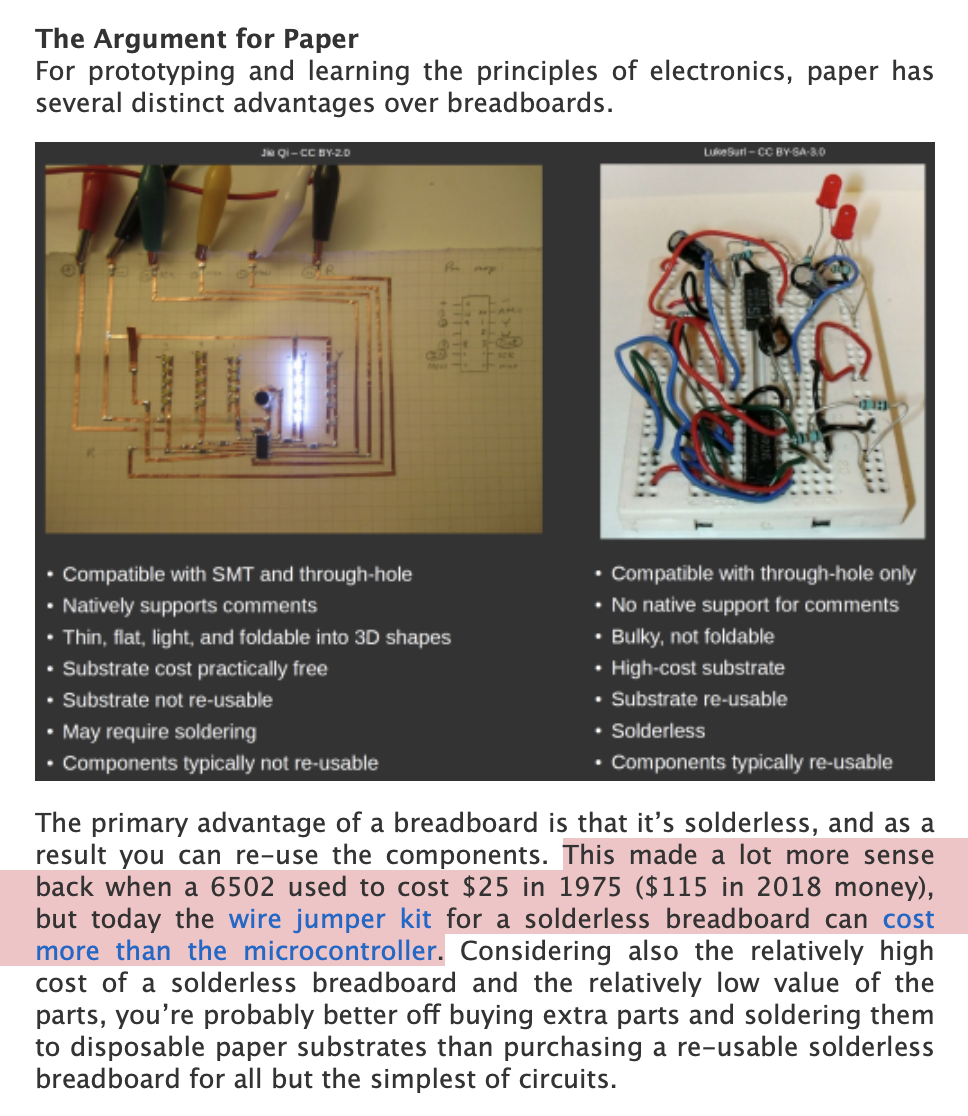

skew where a lot of your computer (CPU, network, graphics) is made out of transistors and has roughly followed a Moore's Law curve over the last 40 years -- but hard disks did not. a hard disk is a spinning platter! its seek time (its latency, the time it takes to get an arbitrary byte off the disk) hasn't improved that much in 40 years; the head still has to move to the right track, and the platter still has to spin the data around to it.
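
part of that physical floor is rotational: once the head is over the right track, it waits on average half a revolution for the data to come around. the spindle speeds below are illustrative (3600 RPM was common on early drives, 15,000 RPM on high-end server drives), and this sketch ignores the time to move the head, which adds several more milliseconds.

```python
def avg_rotational_latency_ms(rpm):
    """Average wait for the target sector: half of one revolution, in ms."""
    ms_per_revolution = 60_000 / rpm  # 60,000 ms per minute / revolutions per minute
    return ms_per_revolution / 2


for rpm in (3600, 5400, 7200, 15000):
    print(f"{rpm:>6} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

even the fastest spindle here only brings the average rotational wait from about 8.3 ms down to 2 ms, roughly a 4x improvement, nothing like the gains in the transistor-based parts of the machine.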

so, for instance, swapping to disk when you ran out of RAM made sense in 1990, when a disk access cost the CPU far fewer cycles than it does today; it does not make sense anymore. today, if you run out of memory and start swapping to disk, your computer will grind to a halt. that whole project of memory paging (which was the whole reason that "virtual memory" was introduced in the first place) is close to useless today!
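
the widening gap can be put in CPU cycles with one multiplication: cycles stalled = access latency × clock rate. the clock rates and disk latencies below are round, illustrative assumptions (roughly a 25 MHz desktop CPU of 1990 and a 3 GHz one of 2021, with hard disk access times in the tens of milliseconds), not measurements.

```python
def latency_in_cycles(latency_seconds, clock_hz):
    """How many CPU clock cycles go by while waiting latency_seconds."""
    return latency_seconds * clock_hz


# Illustrative round numbers (assumptions, not measurements):
ERAS = {
    "1990": {"clock_hz": 25e6, "disk_access_s": 20e-3},  # ~25 MHz CPU, ~20 ms disk access
    "2021": {"clock_hz": 3e9, "disk_access_s": 10e-3},   # ~3 GHz CPU, ~10 ms disk access
}

for era, spec in ERAS.items():
    cycles = latency_in_cycles(spec["disk_access_s"], spec["clock_hz"])
    print(f"{era}: one disk access ~ {cycles:,.0f} CPU cycles")
```

under these assumptions, one disk access goes from about 500,000 cycles to about 30,000,000, so every page fault that has to go to disk stalls the processor for roughly 60 times as many cycles as before.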

and hard disk manufacturers add bigger and bigger on-drive caches to try to keep up with programs that are increasingly too fast for them (and to look good on benchmarks), which adds complexity and reduces reliability.

and there's a social consequence: it distorts the field. researchers and engineers become obsessed with filesystems and filesystem performance, because the disk is the bottleneck for the entire system, which makes it 'low-hanging fruit'.[4]

skew is why things are so complicated. there's so much built-up capital around the inappropriate abstractions that were made for the 1980s situation. you start sticking caching and speculation and stuff everywhere to get the slow parts to keep up, because you can't afford to throw your abstractions out

skew is why things are so unpredictable. the system used to work uniformly for all kinds of workloads, but when you paper it over with caches, you privilege certain uses (the common cases) over others. you create performance cliffs. you make it hard for the developer to understand what will be fast and what will be slow without testing empirically
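
a performance cliff can be reproduced with nothing more than an LRU cache and a loop. in this toy sketch (the capacity and pass count are arbitrary assumptions), a loop touches the same `working_set` items over and over: while they fit, every pass after the first hits; with one item too many, LRU evicts each item just before it is needed again.

```python
from collections import OrderedDict


def lru_hit_rate(capacity, working_set, passes=10):
    """Hit rate of an LRU cache when a loop touches `working_set` distinct
    items in the same order, `passes` times over (a toy model)."""
    cache = OrderedDict()
    hits = accesses = 0
    for _ in range(passes):
        for item in range(working_set):
            accesses += 1
            if item in cache:
                cache.move_to_end(item)  # mark as most recently used
                hits += 1
            else:
                cache[item] = None
                if len(cache) > capacity:
                    cache.popitem(last=False)  # evict least recently used
    return hits / accesses


CAPACITY = 64
for ws in (32, 63, 64, 65, 128):
    print(f"working set {ws:>3}: hit rate {lru_hit_rate(CAPACITY, ws):.0%}")
```

with a 64-entry cache, working sets of 32, 63, and 64 items all hit 90% of the time over 10 passes, while 65 and 128 items hit 0%: one extra item takes the loop off a cliff.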

(and you get second-order problems: memory improving slower => caching becomes important => context switching becomes expensive, because it nukes your cache => you need more complexity to make context switches fast again, or else you start to avoid them because they're slow)
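
the 'context switching nukes your cache' step can also be seen in a toy model. in this hypothetical sketch, process A and process B each loop over 48 cache lines of their own; either working set fits in a 64-line LRU cache alone, but not both together. the sizes and the one-pass-per-time-slice schedule are assumptions, and real caches are set-associative and often shared across cores, so treat this as an illustration of cache pollution, not a measurement.

```python
from collections import OrderedDict


class LRUCache:
    """Fully associative cache of `capacity` lines with LRU eviction (a toy)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()

    def access(self, line):
        """Return True on a hit; on a miss, load the line, evicting the LRU one."""
        if line in self.lines:
            self.lines.move_to_end(line)
            return True
        self.lines[line] = None
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)
        return False


def hit_rate_of_a(with_context_switches, passes=10, capacity=64):
    """Hit rate for process A, which loops over 48 lines of its own data.
    If with_context_switches is True, process B runs after each of A's
    passes and loops over 48 different lines."""
    cache = LRUCache(capacity)
    a_lines = range(0, 48)
    b_lines = range(1000, 1048)
    hits = accesses = 0
    for _ in range(passes):
        for line in a_lines:              # A's time slice: one pass over its data
            accesses += 1
            if cache.access(line):
                hits += 1
        if with_context_switches:         # switch to B, which touches its own data
            for line in b_lines:
                cache.access(line)
    return hits / accesses


print(f"A alone:            {hit_rate_of_a(False):.0%}")
print(f"A interleaved w/ B: {hit_rate_of_a(True):.0%}")
```

running alone, A hits 90% of the time (only its first pass misses); interleaved with B, LRU keeps evicting exactly the lines A is about to need, and A's hit rate drops to 0%.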

anyway, a thing to do is to look at the performance ratios between different components today, and see how they differ from the ratios in 2000 or 1990 or 1980 (or whenever your system's design was originally set)
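
one way to make that comparison concrete, sketched here with hypothetical names: express one component's latency relative to another's in each era, then divide the new ratio by the old one to get a single 'skew factor'. the inputs loosely reuse the 286 vs. Pentium 4 quote; reading "a few cycles" as 3 and "more than 400" as 400, and assuming 1-cycle arithmetic in both eras, are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Latencies of two components in one era, in a common unit (CPU cycles here)."""
    era: str
    fast_component: float  # e.g. an arithmetic instruction
    slow_component: float  # e.g. a main-memory access

    def ratio(self):
        return self.slow_component / self.fast_component


def skew(old, new):
    """How many times wider the gap between the two components has become."""
    return new.ratio() / old.ratio()


old = Snapshot("286", fast_component=1, slow_component=3)
new = Snapshot("Pentium 4", fast_component=1, slow_component=400)
print(f"memory is ~{skew(old, new):.0f}x further from the CPU than on the {old.era}")
```

the same two-line calculation works for any pair of components (CPU vs. disk, CPU vs. network) once you pick comparable latency figures for each era.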

can you come up with new abstractions, new ways of thinking that better reflect the way the components actually relate to each other now?

(This post was originally an email sent to my GitHub sponsors. Sponsor me for more notes like this!)

-

[1] why is it bad that they're complicated? it's disempowering; it makes it less likely that new generations of people will do new stuff; it concentrates power in these large companies and technical professions; it drives the technology in certain directions that are favored by those actors who can afford to work on it

-

[2] I talk about that 'typical 80s computer' because it's what you learn to program, and it may be what you remember using (it was before my time, though), but most of all, it's 'what your computer is pretending to be' in some sense

your computer puts up this abstraction/facade of being an 80s computer (because it grew from that computer in an unbroken line of descent and continued compatibility). when you program your computer, it still presents as 'a CPU that runs your instructions in order'; you don't need to think about caches and memory coherency and speculation and ...

one takeaway from all this is, maybe it's time to throw that old abstraction out; maybe it's now too far gone from the way things actually work, and the facade is more harmful than helpful / more of a constraint than a guide

-

[3] I think I picked this term, but the idea certainly isn't new; I kept hearing it expressed in different forms in different contexts

-

[4] a professor of mine in college, Dawson Engler, would talk about how if you went to SOSP in the 90s, the single biggest area of research was always filesystems, for this reason: a 10% faster filesystem meant a 10% faster computer, because the filesystem was the bottleneck. and -- most of that research is pretty useless today, and anyway, filesystems aren't that interesting! but the skew distorts what people work on